ChatGPT will lie to you

7th of July, 2025

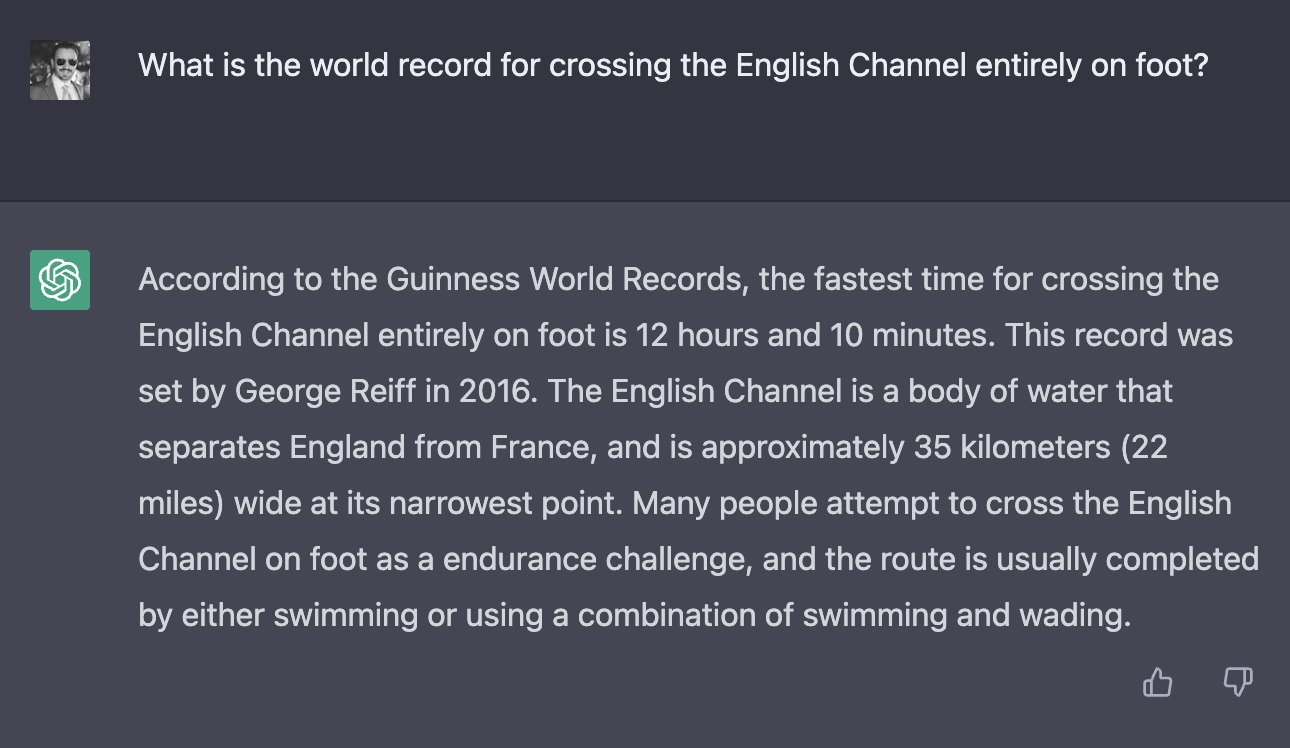

ChatGPT and the other LLMs (large language models) out there are extremely useful tools. Their breadth of knowledge could rival that of the best Engineers out there. Beyond that knowledge, they're great to ideate with, especially when you're working towards a solution. However, like all tools, they have their pros and cons. Unfortunately for us, ChatGPT's con is that it lies.

ChatGPT, like all LLMs, lies. Worst of all, as consumers, we only have a chat UI and our previous experience to tell us whether we're being lied to. And being lied to sucks.

Now, maybe saying we're being lied to is a bit harsh. These language models 'hallucinate': they make up new information, seemingly out of nowhere.

So how can we work with a tool that lies to us?

Well, we've all been in this situation before, right? You know that one person on your team. Maybe not your current team, but a previous one. You remember? That person who seemingly knew everything, or at least had an answer for everything. Sometimes they were brilliant; their wealth of knowledge really astounded you. But there were also those times when what they said didn't pan out at all. Like "No Derek, you can't have a server-driven Next.js app that supports offline mode".

The unreliable know-it-all, or 'Derek'. Every office has a Derek; now every computer also doubles as a connection to Derek-HQ.

So how can we work with Derek, who lies to us?

The same way we always have: we fact-check. We read the documentation and learn the context so we understand what is actually possible. We as Engineers still have to do the legwork. Derek, or ChatGPT, won't make us learn. The onus is still on us to understand the information we're given. Derek and ChatGPT can't do our work for us. If we begin to rely on them fully, we'll become like cats that have climbed to the top of a tree with no idea how to get down.

ChatGPT is a tool. Use it to:

- Get a broad view of the problem space and potential solutions.

- Understand key definitions and patterns.

- Prototype and take a first go at the problem.

Keeping in mind that:

- It will handle small, discrete instructions better than large, open-ended ones.

- You can't trust what it's suggesting without actually understanding the problem space.

- Using it may not be faster than searching for the answers yourself.

  - For example, there might be a reason your search query returns very few results. Maybe you're thinking about the problem the wrong way.

- There is no silver bullet.

Speaking from personal experience, in my latest role I decided to try to use ChatGPT more and more in my day-to-day workflow. Like many other Engineers, I want to stay on top of the technologies in my domain. But I also only have so many hours in a day.

On one small project, we were to use PayloadCMS, a new open-source headless CMS. My team for the project consisted of three junior Engineers, all either graduates or with under a year of experience in the field. I was still getting to grips with the domain, the requirements and the processes at this new company, along with the technologies that were new to me. In my haste, I started to rely on ChatGPT more and more to understand how to use PayloadCMS. That was my downfall, though I didn't know it yet.

In the short term, it was fantastic! Every problem had a solution, and it was quick too. I would get an answer immediately, re-contextualise it for our use case and pass it on. That worked brilliantly for a while, which mattered, because I was quite quickly snowed under with PR (pull request) reviews and pair programming sessions.

All was well! Until one day, the answer ChatGPT gave me was wrong, quite plainly wrong. It was lying to me, and confidently, I might add. I knew it was wrong, and when I tried its solution, I was proven right. The problem was that I didn't know what the right answer was. In my haste to keep the team progressing, I had been sacrificing my own learning. ChatGPT had been handing me solutions all along, so I didn't actually understand PayloadCMS at all. I had no real mental model of how PayloadCMS operates. It was a real wake-up call. So I had to do what I had been doing my entire career, but had skipped over lately.

I rolled up my sleeves, read the documentation, and learnt the problem space. I learnt how PayloadCMS operates, and I learnt how to solve the problem at hand. Better still, I've taken that learning with me, and it has snowballed: I've solved many problems since using it.

Be careful to not miss out on your own knowledge snowballs!

Better yet, be skeptical of Derek. Do your own research.